Stephen runs a furniture and home decor store. He’s a big fan of email marketing and uses it to communicate regularly with his customers. He sends a lot of DIY tutorials, product updates, and promotional messages. One morning, while reviewing his open and click rate reports, Stephen notices that some emails have performed better than others. He wonders how their performance could differ so much when the messages are almost identical.

He then decides to revisit the basics of email marketing and see what he’s missing:

A clean, updated mailing list: Check

Content to share: Check

A segmented list: No

A personalized message for the audience: No

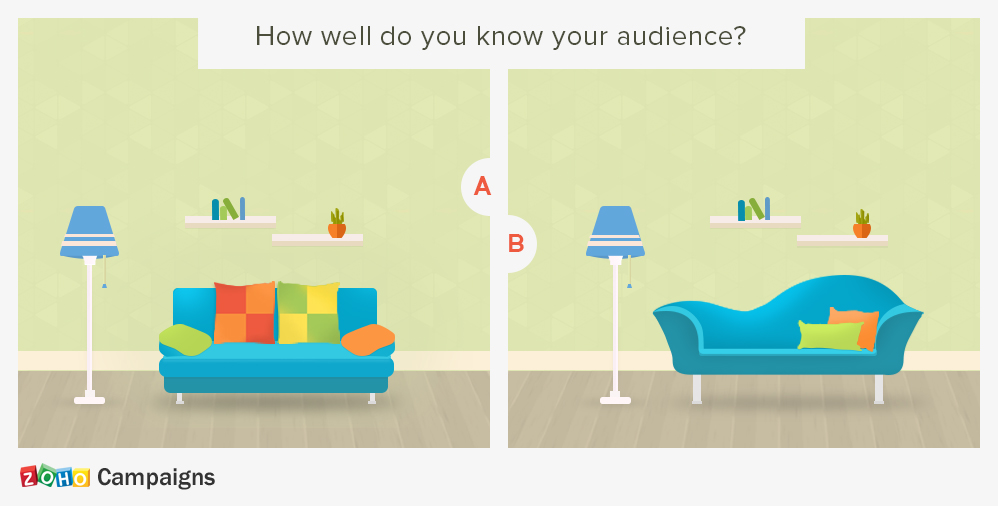

After this exercise, Stephen realizes that he hasn’t been sending the right message to his audience. To avoid this common mistake, he needs to A/B test his emails. A/B testing will show him which messages are likely to connect with his audience and which aren’t.

If you’re like Stephen and looking to start your first A/B testing campaign, here’s a short checklist to get you started.

#1 Have a clear strategy before you start your test.

When you decide to A/B test your emails, first plan what you want to accomplish with the test. Why? A test without a goal is as good as a dart without a target. A recent study says 74% of marketers who had a structured plan achieved better conversion rates. So frame a hypothesis, then figure out what evidence you need to support it.

Quick tips:

Before starting the test, always try to complete this sentence: “I will do ______ to achieve ______.” For example, if you want to increase your open rate from 20% to 30%, think about different ways to achieve it. One possibility is to ask customers, when they sign up for your newsletter, which topics they want to hear about. Sending periodic surveys can also tell you whether your audience finds your content relevant.
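If it helps to write the goal down in a structured way, here is a minimal Python sketch of the fill-in-the-blank sentence above. The `Hypothesis` class and its field names are made up for illustration; the 20% and 30% figures come from the example in the tip. It also shows that going from 20% to 30% is a 10-point gain, which is a 50% improvement relative to where you started.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    """One test goal in the form "I will do <action> to achieve <outcome>".

    Hypothetical helper for illustration; not part of any email tool.
    """
    action: str
    metric: str
    baseline_rate: float  # current rate, e.g. 0.20 for 20%
    target_rate: float    # rate you hope to reach, e.g. 0.30 for 30%

    def point_lift(self) -> float:
        """Absolute improvement in percentage points."""
        return (self.target_rate - self.baseline_rate) * 100

    def relative_lift(self) -> float:
        """Improvement relative to the baseline, as a percentage."""
        return (self.target_rate - self.baseline_rate) / self.baseline_rate * 100

    def statement(self) -> str:
        """Fill in the blanks of the goal sentence."""
        return (f"I will {self.action} to raise {self.metric} "
                f"from {self.baseline_rate:.0%} to {self.target_rate:.0%}.")


goal = Hypothesis(
    action="ask new subscribers which topics they want",
    metric="open rate",
    baseline_rate=0.20,
    target_rate=0.30,
)
print(goal.statement())
print(f"{goal.point_lift():.0f} points, {goal.relative_lift():.0f}% relative lift")
```

Writing the baseline and target next to the action makes it obvious, once the test is over, whether the hypothesis held.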

#2 Experiment with one variable at a time to avoid confusion.

A/B testing can show clearly how small variations in your email affect your reach. Keep the variations to a minimum and experiment with each variable independently, so you know which one makes a difference in the results. Why? Testing too many variables at once makes it harder to know which one had the greatest impact on a campaign. (Plus, you end up comparing apples to oranges.) If you don’t see a big difference, test another variable. Although it’s time-consuming, it will literally pay off.

Quick tips:

A good subject line can often get someone to open your email, so start by experimenting with subject lines. While the ideal length for a subject line varies from industry to industry, we’ve found that 6 to 11 words is the sweet spot. Do a bit of research to see which of your past subject lines earned the most opens and how else the audience reacted. You can improve reception by personalizing the subject line using merge tags.
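Once two subject lines have gone out to equal-sized groups, you still need to decide whether the gap in opens is real or just noise. One common way to check (a general statistics technique, not something specific to any email tool) is a two-proportion z-test. The sketch below uses only Python’s standard library; the function name and the send and open counts are hypothetical.

```python
import math


def compare_open_rates(sent_a, opens_a, sent_b, opens_b):
    """Two-sided two-proportion z-test on the open rates of versions A and B.

    Returns (rate_a, rate_b, z, p_value).
    """
    rate_a = opens_a / sent_a
    rate_b = opens_b / sent_b
    # Pooled open rate under the assumption that A and B perform the same.
    pooled = (opens_a + opens_b) / (sent_a + sent_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    if std_err == 0:
        # Nobody (or everybody) opened both versions: no measurable difference.
        return rate_a, rate_b, 0.0, 1.0
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return rate_a, rate_b, z, p_value


# Hypothetical counts: same email body, only the subject line differs.
rate_a, rate_b, z, p_value = compare_open_rates(
    sent_a=250, opens_a=50,   # version A: 20% opened
    sent_b=250, opens_b=75,   # version B: 30% opened
)
print(f"A: {rate_a:.0%}  B: {rate_b:.0%}  z = {z:.2f}  p = {p_value:.4f}")
if p_value < 0.05:
    print("The difference is unlikely to be chance alone.")
else:
    print("Not enough evidence yet; keep testing or try another variable.")
```

The 0.05 cutoff is a convention, not a rule. Because only one variable changed between the two versions, a small p-value can be attributed to the subject line rather than to something else in the email.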

#3 Give it some time before you come to a conclusion.

Don’t expect immediate results. Run your tests long enough to see the real impact your emails are making. Your sample audience may span different time zones and open emails at different times, so it’s best to wait before drawing a conclusion. The duration of a test largely depends on three factors: the sample size of your audience, the day of the week, and the time of day you send your campaigns. For example, if your campaign targets 5,000 contacts and the test sample is 10% (500 contacts), running the test for one full day can give you reasonably good results. If you send your email during the first three days of the week, running the test for 24 hours should be enough. If you send on a Friday or over the weekend, wait three days to get significant results.
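The sizing and waiting guidelines above can be sketched in a few lines of Python. This is a rough illustration under stated assumptions, not a rule: `plan_test` is a hypothetical helper, “the first three days of the week” is read as Monday to Wednesday, and the two-day wait for a Thursday send is a guess, since the guideline doesn’t cover that day.

```python
import datetime


def plan_test(total_contacts, sample_percent, send_date):
    """Size the two test groups and suggest when to read the results."""
    sample_size = total_contacts * sample_percent // 100
    per_variant = sample_size // 2  # split the sample evenly between A and B

    # date.weekday(): Monday is 0, Sunday is 6.
    weekday = send_date.weekday()
    if weekday <= 2:       # Monday to Wednesday (assumed meaning of "first three days")
        wait_days = 1
    elif weekday >= 4:     # Friday, Saturday, Sunday
        wait_days = 3
    else:                  # Thursday: not covered above, so this is a guess
        wait_days = 2

    return {
        "sample_size": sample_size,
        "per_variant": per_variant,
        "wait_days": wait_days,
        "read_results_on": send_date + datetime.timedelta(days=wait_days),
    }


# 5,000 contacts with a 10% test sample, sent on a Monday and on a Friday.
monday_plan = plan_test(5000, 10, datetime.date(2024, 1, 8))
friday_plan = plan_test(5000, 10, datetime.date(2024, 1, 12))
print(monday_plan)
print(friday_plan)
```

With 5,000 contacts and a 10% sample, each version goes to 250 people. Smaller lists may need a larger sample percentage or a longer wait to produce a clear winner.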

Quick tips:

Choose a send time based on the recipient’s location, because that can greatly influence open and click rates. Also make use of historical data, such as when a recipient opened the last email you sent.

Bottom line: When it comes to A/B testing, there is no one-size-fits-all approach. But these three simple steps are a good general guideline for anyone who is just starting out with A/B tests. And the best part of A/B testing is that if you succeed, you know what worked with your audience, and if you fail, you know what didn’t. It pretty much takes the guesswork out of picking the right message!